At the beginning of this week, the Internet was amused by a story from Bashkiria, where an elderly man could not get into his own building after quarreling with a robot. A camera installed at the entrance of the high-rise recognizes all the residents by their faces and opens the door for them. But it did not recognize this man. For a couple of minutes the pensioner argued and swore at the soulless machine. He shouted and fumed, while the robot monotonously replied, “I did not understand you.” “Because you're stupid, damn it!” In the end, the man won: the system recognized him.

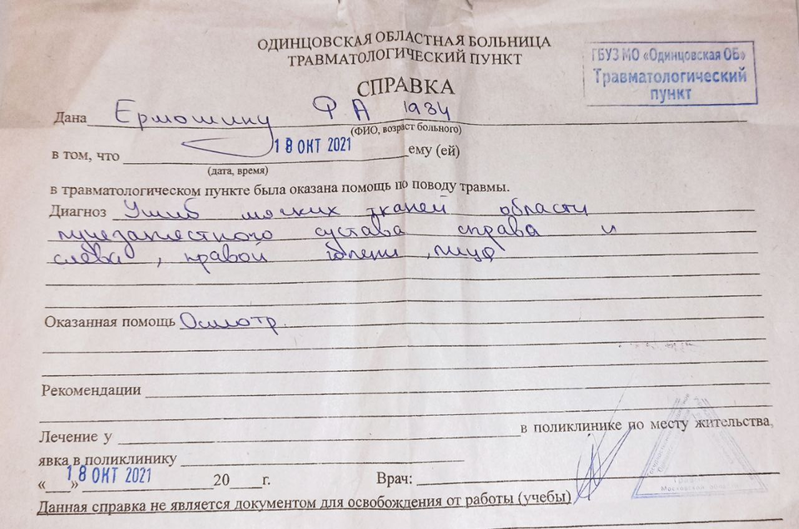

Everyone laughed and made witty jokes in the comments about “the rise of the machines.” But a day or two later, something happened in Moscow that was no laughing matter at all. Fyodor Yermoshin, a 36-year-old PhD in philology, left his building in the morning, and three men in plain clothes immediately approached him and twisted his arms behind his back. They pushed him into a car, identifying themselves as police only verbally, and demanded that the academic hand over stolen game consoles. He has no idea what such consoles even are.

Only a couple of hours later, at the Strogino police station, did it become clear that they had detained the wrong person. Yermoshin was shown a printout from the face recognition system: supposedly his photo matched the image of a thief named Oleg by 70%. The Ministry of Internal Affairs announced a review of the officers' actions. But after this, the mood in the comments was completely different: it turns out that any of us could end up in the place of the teacher, who was handcuffed in front of his neighbors like a criminal.

70% MATCH – IS IT A LOT OR A LITTLE?

Recall that the face recognition system began operating at scale in Moscow in 2018, during the FIFA World Cup, although it had been tested successfully before that. Today it is an indispensable tool for finding criminals of all stripes. Take, for example, the recent case in which young men from Dagestan who beat up a guy in the metro were found thanks to CCTV cameras, which spotted them on the streets of the capital two days later.

And yet, can the system really “glitch” and frame respectable citizens?

“Any recognition system has an algorithm for comparing incoming objects with what is already in the database,” Stanislav Ashmanov, CEO of Nanosemantics, explains to kp.ru. “That is, the system analyzes the video streams from all the cameras connected to it and captures faces. Then a new photo of a person comes in, along with a request to the machine: do you recognize him or not? The system checks, but it never makes a definitive decision. It only issues a report, a recommendation: it says whether the face in the incoming picture matches someone in the database. What to do with that information next is up to a person. Surely the police have written instructions on this matter.”

One of the most popular questions in the comments under articles about the Strogino police officers: is a 70% match a lot or a little?

“In face recognition systems, you can set a similarity threshold at which the system considers the person in the image and the person in the database to be the same,” Dmitry Markov, CEO of VisionLabs, tells kp.ru. “The right threshold depends on many factors. Under normal, or rather ideal, conditions, it is recommended to set it no lower than 95%. However, you have to understand that real-world deployments involve many nuances, and then the thresholds must be tuned individually.”

“We don't know what match threshold is set in this program or what scale it uses,” Stanislav Ashmanov continues. “For example, I know that the metro video surveillance system was installed by several vendors (manufacturers, suppliers. – Auth.). There are many other factors as well: different lighting, different equipment, different picture quality. It may be, for example, that the similarity between my face in a fresh photo and in one taken six months ago is estimated at just 70%. And yes, any system has an error rate. If the match threshold is low, the result should be treated like a call from a grandmother in the next entrance who insists she saw someone resembling the photo in a wanted notice. She might be right. But she may also be wrong. This has to be checked.”
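For readers curious about the mechanics the experts describe, here is a minimal sketch of threshold-based matching. It assumes, hypothetically, that each face has already been converted into a numeric vector and that similarity is measured as cosine similarity; real vendors use their own scoring scales, and the names, vectors and thresholds below are invented for illustration, not taken from any system used in Moscow. In line with Ashmanov's description, the function only returns candidates for a person to check, not a verdict.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine similarity of two equal-length vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def find_candidates(probe, database, threshold):
    """Return (name, similarity) pairs at or above the threshold, best first.

    The output is a list of leads for a human to verify, not a decision.
    """
    scored = [(name, cosine_similarity(probe, emb)) for name, emb in database.items()]
    hits = [(name, score) for name, score in scored if score >= threshold]
    return sorted(hits, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    # Hypothetical three-number "face vectors"; real systems use far longer ones.
    db = {"person_1": [1.0, 0.0, 0.0], "person_2": [0.6, 0.8, 0.0]}
    probe = [0.8, 0.6, 0.0]
    print(find_candidates(probe, db, threshold=0.95))  # only person_2 passes
    print(find_candidates(probe, db, threshold=0.70))  # person_2, then person_1
```

With the strict 0.95 threshold only one candidate comes back; lowering it to 0.70 adds a second, weaker "match" for the same probe photo, which is exactly the kind of lead that still has to be checked by a person.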

“THEY WERE SURE BIG BROTHER COULD NOT BE WRONG”

According to Ashmanov, the “failure” in this case may have occurred because the police blindly trusted artificial intelligence:

“Those people may have been convinced that the system, Big Brother, could not be wrong.”

– The face recognition system has been used in Moscow since 2018. So in three years, the story of Fyodor Yermoshin is the first such scandal?

– We found out about this case because the person it happened to wrote a post on Facebook, and he has many friends who reposted it. I think there have actually been more such cases; it's just that before, when there was such a match, people were properly checked, apologized to and released. And nobody posted about it on social media.

At the same time, the expert argues, whenever a system is configured, there is always a risk of setting it too strict or too lax.

– If the system makes a mistake and, say, won't let you use your bank card, that is one thing. But what if it misses a dangerous criminal because the match threshold is too high? In any case, false positives are not so scary if the procedure for responding to them is adequate, the expert says.
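The trade-off the expert describes can be illustrated by counting errors at different thresholds. The sketch below uses a handful of made-up similarity scores: some from comparing photos of the same person ("genuine"), some from comparing different people ("impostor"). The numbers are invented for illustration only and say nothing about any real system; the function name is likewise hypothetical.

```python
def error_counts(genuine, impostor, threshold):
    """Count both kinds of error at a given threshold.

    false_matches: different people scored at or above the threshold
    (an innocent person is flagged).
    missed_matches: the same person scored below the threshold
    (a wanted person slips through).
    """
    false_matches = sum(1 for s in impostor if s >= threshold)
    missed_matches = sum(1 for s in genuine if s < threshold)
    return false_matches, missed_matches


# Invented scores, for illustration only.
GENUINE = [0.98, 0.96, 0.91, 0.72, 0.68]   # same person, e.g. photos months apart
IMPOSTOR = [0.40, 0.55, 0.63, 0.71, 0.74]  # different people who look alike

if __name__ == "__main__":
    for t in (0.60, 0.70, 0.95):
        fm, mm = error_counts(GENUINE, IMPOSTOR, t)
        print(f"threshold {t:.2f}: false matches={fm}, missed matches={mm}")
```

With these invented numbers, a 0.60 threshold flags three look-alikes and misses no one, while 0.95 flags no look-alikes but misses three genuine matches. No setting removes both kinds of error at once, which is why the response procedure matters.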

A few words from the author. You can criticize the face recognition system as much as you like, but its effectiveness hardly needs proving. Here is one example. Seventeen years ago, in 2004, a man and a woman were killed near Aprelevka outside Moscow. The suspects, a married couple, fled and disappeared without a trace.

The case seemed destined to stay unsolved forever. But then the same face recognition system found the couple. Over the years, the man and woman had changed their first names, surnames, years of birth and even citizenship. Later, living in the Krasnodar Territory, they obtained Russian passports. And suddenly the machine found them, the criminals from 2004, by comparing the photographs that citizens submit when applying for certain documents. After they were detained, DNA testing against their relatives was carried out, and it became clear that the system can do truly incredible things…

OPINION OF A LAWYER AND FORMER INVESTIGATOR

“The human factor will always influence digital development”

The well-known lawyer Igor Bushmanov, who in the 1990s worked as an investigator for especially important cases at the Moscow Region Prosecutor's Office, believes the human factor was at work in Yermoshin's case.

“Of course, the face recognition system itself is very useful, and it is being deployed all over the world,” he says. “A large number of crimes in which the perpetrator was initially unknown are now being solved thanks to it. The system produces one result or another based on the biometric data of particular people. But how that data is used afterward is a question for law enforcement. If the use of force went beyond what was permitted, an official review should be ordered and measures taken. (The Ministry of Internal Affairs has already reported that its Internal Security Department is reviewing the officers' actions. – Auth.) Whether we like it or not, the human factor will always influence the digital development of society.”